Use of Generative AI in Legacy Comics

During 2025 the approach towards making illustrations have completely changed with inclusion of handcrafted 3D modeled items. You can read the new article here. Still, for the sake of keeping historical accounts, the old article about use of Generative AI has been preserved and can be read below.

The Artistic Intention vs. Generative AI

AI-Generated illustrations tend to have little to no perceived value compared to handcrafted traditional art. It is the case because differently from traditional techniques, a creator needs very little skills to prompt for an image compared to drawing one by hand. And it is completely true. You can see that image below was created with and extremely simplistic prompt… And it looks dashing!

Prompting for a random result:

“beautiful woman, sci-fi setting”

(Yes! This is the exact prompt for this image!)

But, there is a caveat here. This image has no creative value because it lacks any artistic intention. And it is completely unsuitable for any sequential project like a comic because of its randomness and impossibility to replicate by using similar means. On the other hand such short prompt can be used for brainstorming as the Generative AI will spit out thousands of completely random variations of a similar concept.

Creation of deliberate imagery with Generative AI requires a different approach to prompting. It is much more demanding on prompter’s art direction skills, but it also results in valuable output that is coherent with creator’s artistic intention. Illustration below features an original character called The Huntress.

Prompt for this image includes even the smallest details that make the AI-Generated output consistent with the original concept. As a result, this image features both: a much better gamma of complementary colors, and visualizes a photorealistic rendition of a character that is visually consistent with the stylized version of The Huntress.

Prompting for a deliberate result:

“a shot from an 90s science fiction film. middle-aged athletic hairless Iranian woman, tanned skin, slim nose, brown eyes, bald head, angry expression, looking away. she is wearing a futuristic sharp angular black composite armor over a black carbon fiber undersuit, tall protective collar, sleek shoulder pads, wearing a white ceramic chest plate, black tactical gloves. holding a sleek futuristic white blaster rifle with a white stock, white rangefinder scope on top of it. she is standing in an action pose, looking away. lush purple alien jungle, red trees, blue mist in the background. action shot, cinematic shot, intricate details, film grain, in style of 90s retro futuristic aesthetics, masterpiece, hd, sharp focus“

Sophisticated prompting is rarely enough though for making imagery that can be efficiently used to assemble a comic. Even when User Interfaces for Generative AI can provide control over character’s pose, image perspective, and load pre-trained LORA’s for character consistency, they still fail to generate an output with sufficient fidelity to tell a complex story. This is where a skilled human touch is required.

Everything Begins with a Script

Before even touching the visualization tools, a coherent story must be written. Image 1 features a pretty early example of a script for NADIA #1. It’s format is very close to a screenplay. This approach turned out to be inefficient though and had to be simplified.

Currently, each comic begins not with a script, but with a detailed treatment – it describes each scene in detail, but most of dialogue is being left blank, except for the most important parts. The time saving aspect of such method lies in a fact that the dialogue that feels good and snappy in the head can become a total drag when placed over illustrations. And if 90% of it will be rewritten to match the rhythm of the illustrations… Why bother writing every detail down in the first place? Obviously, everything gets rewritten anyway, but lack of predefined dialogue allows for much more freedom during the scene composition.

When the treatment is complete, a mockup of a comic can be produced based on it’s contents. Earlier workflow was based on a library of low resolution AI-Generated images that were used as a base to assemble the comic. As of now, custom-built 3D environments with hand-animated 3D actors are used to pre-visualize the story.

Image 1 – Everything begins with a script.

Writing a detailed script for a comic is not a paramount, but it becomes very helpful in setting up the story and developing the narrative details.

On this image some lines describe what is shown on the illustrations, while others represent the dialogue. This script is not processed by LLM, but is used solely to account and retain all parts of the story.

Traditionally, in comics, a different format is preferred though – there all lines are written directly in speech bubbles over pre-visualized illustrations. This way every dialogue gets organically adjusted for its place on the page.

Crafting the Image Library of Raw Generations

Before the assembly of pre-visualized comics can begin, plenty of low resolution AI-Generated Images are produced by a “shotgun method” where multiple variants of a character/scene are generated at once. Differently from drawing by hand or working with 3D models, this approach opens up for much wider artistic exploration where AI-specific randomness provides much better options then intended.

Image 2 – There are only 9000 ways to create any scene.

After the outline is written, a concepting process begins. Appearance for each character is being planned and prompted.

Same prompt with different descriptions for the pose/angle/expression is used to generate a plethora of images to choose from.

As image 2 illustrates, very few of total amount of AI-generated images are good enough to become illustrations in the comic. Most are kept strictly for pose/prompt reference or future use.

Unfortunately, above mentioned method is good only for creating simpler character-focused imagery. Illustrations with complex poses, unusual angles or multiple characters require a different approach.

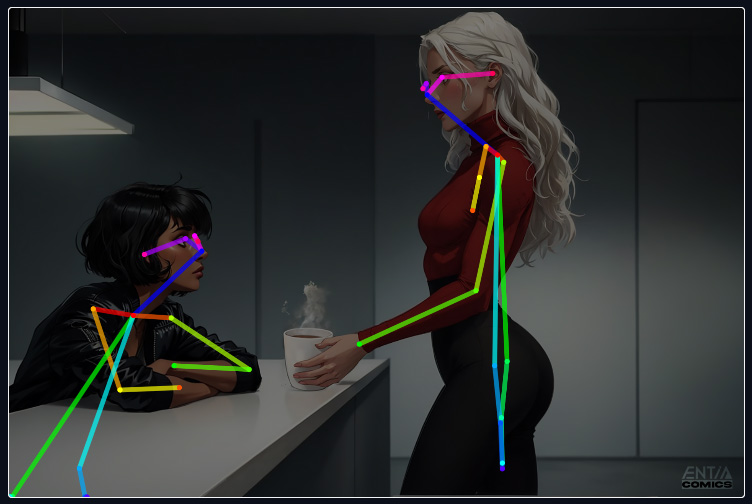

Image 3 – Manual scene composition.

Illustrations where poses and angle are too important to be randomized by AI are made with the help of ControlNet. It allows deliberate placement of skeletons for each character.

When a critical mass of images for each scene from the outline is created, a mockup stage can begin. Thereafter more concepts can be generated if required by the story.

Assembling the Mockup of the Comic

During the Mockup stage, Raw images are inserted, adjusted and thereafter gradually replaced with their manually edited versions and thereafter the Final Art. Those edits feature replaced backgrounds, hand painted outfits, handcrafted details and other manual adjustments that make them ready for the AI-Assisted upscale to become the Final Art.

Image 4 – The mockup example based on the script from the Image 1.

Images are added to the pages in order to create a pre-visualization of the final comic. These pages are not final, but can give a really good understanding about what works and what is missing.

This particular example page is far from perfect:

- The top image of the city seems to be too small to properly give the reader a sense of place.

- Images in the door opening sequence lack variety.

- Elza’s reaction shot on the bottom is too narrow to effectively convey her emotions.

All these flaws will be corrected as the mockup evolves towards its final shape. On this stage, even whole pages can be added or removed in order to improve the pacing and visual flow of the comic .

Just like the final comic, the mockup is assembled inside the CLIP Studio Paint. This approach makes it very easy to finalize the comic by simply replacing the mockup’s material with complete illustrations. The journey from a Raw AI-Generated image to Final Art is depicted below.

From Raw AI-Generated Image to the Final Art

No single image created by ENTIA Comics is ever released without being significantly altered towards its final shape. This principle is important because of three reasons:

- The strong artistic direction behind each aspect of the Universe of ELZA.

- The hands-on manual approach allows to eliminate all inherent flaws of AI-Generated imagery while maintaining the AI-powered speed.

- Manual alterations and edits create a satisfying sense of ownership for the creator, while readers feel the care by looking at each illustration.

Image 5 clearly shows what king of journey that awaits each single illustration from being a Raw AI-Generated piece towards becoming the Final Art that is manually composed in Photoshop.

Image 5 – From AI-Generated image to an AI-Assisted Final Art in 4 steps.

Taking a detour from Nadia, this is a progression of an illustration featuring Kira from the graphic novel The DESCEND.

The first image is AI-Generated by using the “shotgun approach”. It is picked from the Image Archive. Her clothes and environment do not match the artistic intention, though so they have to be manually edited.

The Second image is much closer to the desired result as it features the right pose, angle, clothes and the background. The red patch on Kira’s shoulder is an original handcrafted design.

Third image is an intermediate AI upscale. Here Kira is successfully matched with the environment, but some artifacts appear. Parts of this image will be further edited and processed with AI before being manually combined with handcrafted elements.

Fourth image is the Final Art. It is made of multiple AI-Upscaled elements that are both handdrawn and based on AI-Generated imagery:

- The spaceship is back in the background.

- Her red shoulder patch is consistent with the original design while being seamlessly stylized.

- Rebecka can be seen studying a plant in the background.

All these steps provide impeccable control to the creator and turn AI-Generated imagery into a form of handcrafted digital collage. Image 5 shows that no compromises on color, shape, nor form were made during the creation of the Final Art – the end image becomes exactly what the author have intended.

The Final Result

After all images were developed into the Final Art, they can be inserted into the comic while replacing the imagery from the Mockup phase. This part of the process opens up for even more adjustments in order to achieve the best final result. Plenty of those changes can be clearly seen when comparing Image 5 and Image 6.

Image 6 – The finished panel of a comic.

As the final step, all complete illustrations are being added together by replacing those previously shown AI-Generated images inside the mockup comic.

Here are the key differences between this final product and the Mockup:

- The image of exterior is moved to a separate page. Instead, an interior image of Nadia’s apartment was added to provide an even better sense of place.

- Characters did also get some more room to express themselves.

- Text was added inside the speech bubbles.