Use of Generative AI by ENTIA Comics

TL;DR: I’m committed to doing as much as I humanly can to make this project an authentic creation. I use a fine-tuned, locally run AI sparingly, and only in the least creative areas where it’s crucial to getting things done.

Introduction – Not All AIs Are the Same

The adoption of generative AI into the mainstream in the early 2020s can be compared with the technological revolution of the 1980s, when personal computers became widely available to private users and companies across the world. Generative tools were received so strongly because they can create substantial output from a relatively small input from the user. Machines became capable of carrying out ever more complex tasks and, for the first time, of generating artistic products that formerly required a skilled human specialist with years of education and experience. Just like back in the '80s, not everyone was happy to face such a sudden paradigm shift; some felt threatened by the new technology. Others, like yours truly, saw a field of endless opportunities!

In the context of this article, two types of generative AI can be identified:

- Cloud-Based AI Models: online-only services like ChatGPT, Midjourney, Google Gemini, etc. These AI Models run solely in corporate data centers and are constantly evolving through the collection of user data and deliberate training. Because of that, such AI Models give the user extreme versatility, but they can also be unpredictable. Users of a Cloud-Based AI Model have no real insight into the changing factors that affect its reasoning. Also, given their legal liabilities, services that provide access to such AI Models have to maintain harsh censorship that restricts creative use of their product.

  - Because of these shortcomings, and given real creative needs, ENTIA Comics does not use Cloud-Based AI Models. The only exceptions to this rule are basic grammar correction and some small tasks that require more compute than a local system can handle.

- Locally-Run AI Models: these AI Models are designed to run on affordable, consumer-grade personal computers. To be compatible with such limited hardware, they are orders of magnitude smaller than their Cloud-Based counterparts. Because of these size restrictions, each type of Locally-Run AI Model is really good at only a narrow range of tasks. Good examples are Stable Diffusion 1.5, SDXL, FLUX and Llama. Locally-Run AI Models can also be custom-trained to expand their capabilities, extend their stylistic output, and remove all censorship.

  - ENTIA Comics uses only open-source Locally-Run AI Models in its production pipeline. The signature Style AI Model that upscales all illustrations before their final edit is based on a Stable Diffusion derivative and is custom-trained to maintain the unique style of the Universe of ELZA.

The power and perceived autonomy of AI-powered tools allow human creators to minimize their own agency while still producing coherent artistic output. But is AI merely a tool, then, or can it be regarded as a co-creator of the pieces its human operator prompts it to render? And if so, who is the “real” creator: the machine, or the living human who guides it with prompts? These are valid questions, and they call for a thorough disclosure of how ENTIA Comics uses generative AI.

AI-Free Elements in Creations by ENTIA Comics

Currently available Generative AI Models are not self-aware and base their reasoning on vast but limited training data. Unlike the human mind, which is constantly learning and evolving, AI is restricted to its defined parameters and therefore cannot produce anything beyond the median of that pre-learned data.

This means that when creating a unique artistic product (like a comic), Generative AI can be used effectively only as a powerful tool to automate narrow, skill-heavy tasks, like detailed rendering of illustrations or text correction. Broad tasks that require agile reasoning, like original art creation, ideation and writing, are still best performed by a human author.

Therefore, in all products by ENTIA Comics, conceptualization, ideation, story development and worldbuilding are AI-free. And they will remain so regardless of upcoming technological advances, for one simple reason: art is meant to surprise and inspire, so a machine that is built to serve cannot, by definition, be “alive” enough to produce compelling art. Below are a few examples of AI-free creations by ENTIA Comics.

Concept Art and Design

The Universe of ELZA, in its current iteration, can be defined as a grounded space opera with strong internal laws based on real-life concepts. This means that spaceships, weapons and architecture in this setting cannot be completely whimsical; they have to follow deliberate design principles and the internal logic of the Universe.

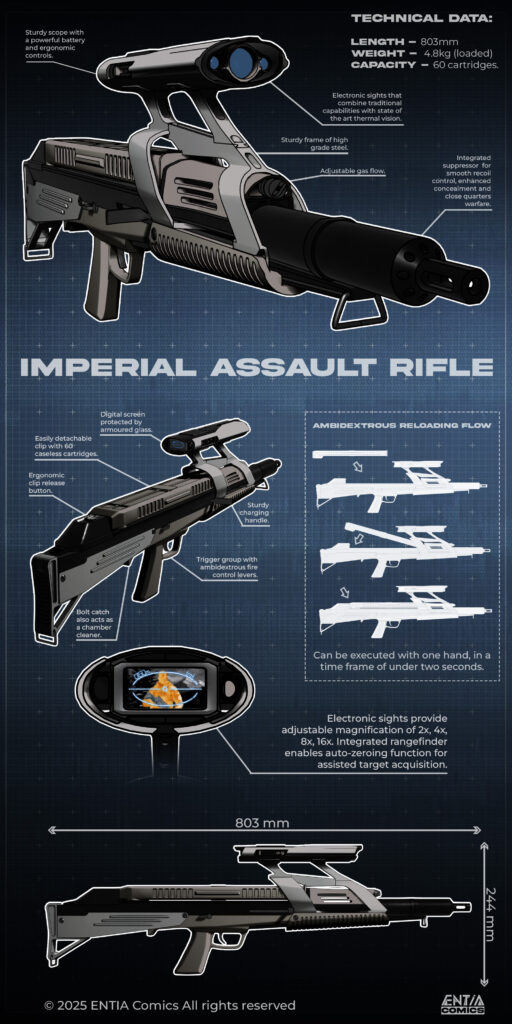

Concept art featuring multiple assault rifle designs. All of them were created in Photoshop from images of real-life weapons, so each design is logically plausible while keeping a unique look.

Because of these strict requirements for each unique design, there is no room for the randomness that is so prevalent in AI-generated output. Every design has to be created by hand, using real-life references and imagination.

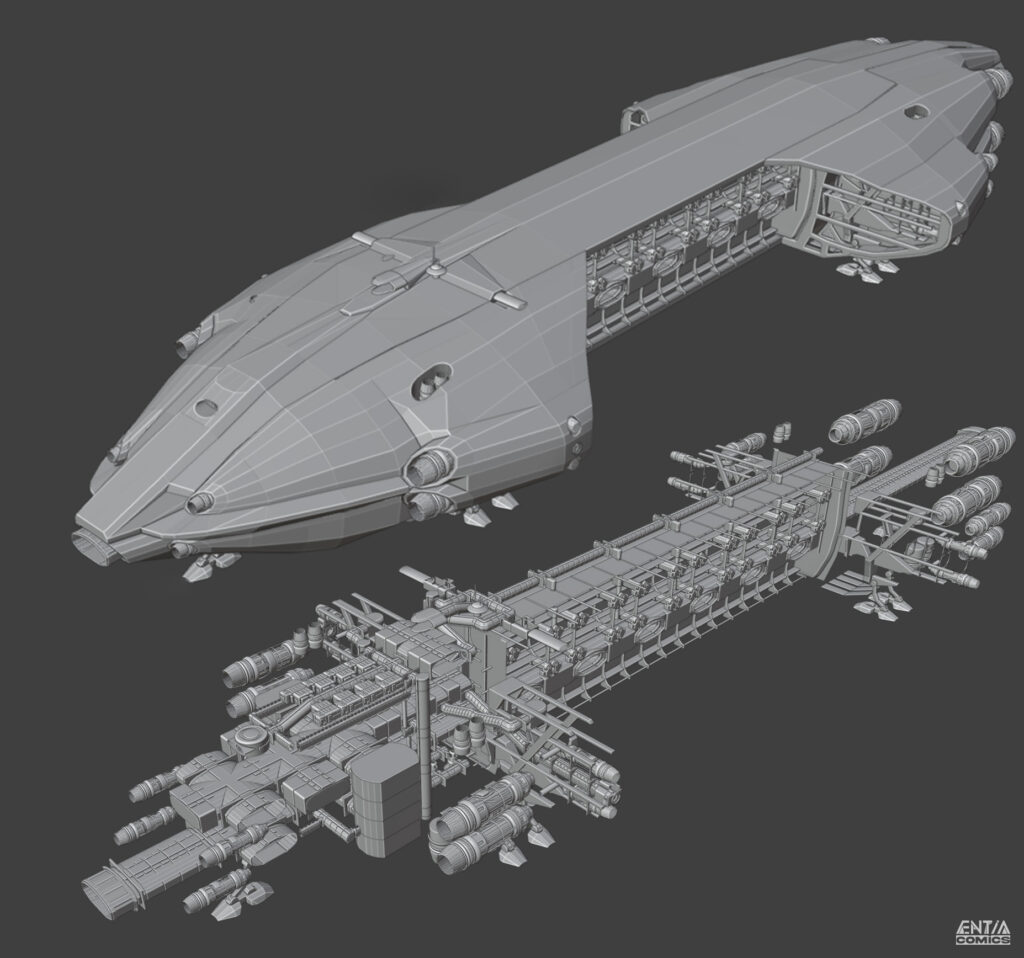

This is an intermediate picture of the 3D model for an Imperial Large Transport Ship called Agna. It features both the ship's unique exterior and its fully realized interior. Too many artistic, narrative and aesthetic factors are involved in creating such a model for any currently available AI to grasp.

The other aspect is complexity. Generative AI (unless it is custom-trained for this specific task) cannot grasp complex concepts like the mix of stylistic, narrative and engineering factors that drive the design of a complex item such as a spaceship, a weapon or a building. And even if AI were up to the task, the inability to adjust the smallest details of AI-generated output would make it much more restrictive than what a skilled human produces.

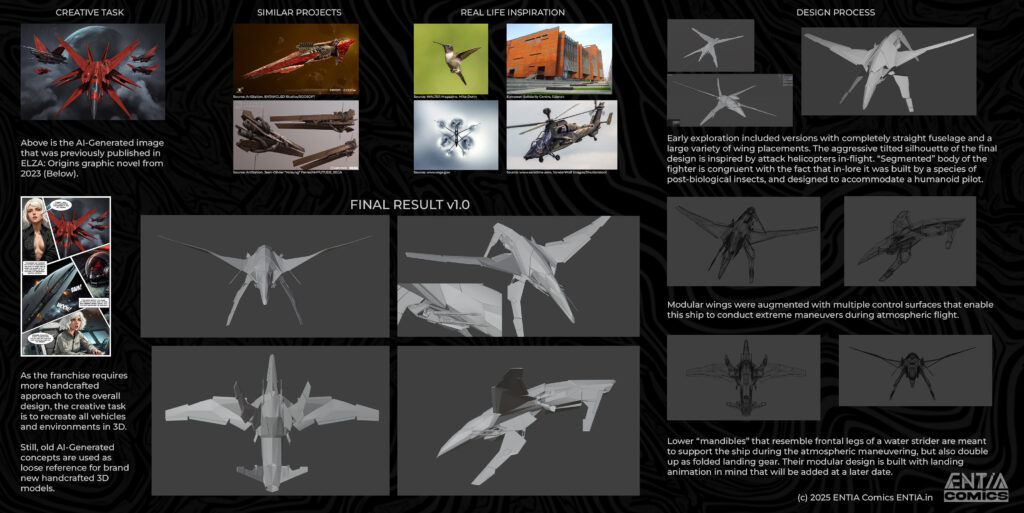

A design process breakdown for the Red Fighter, including the Creative Task, Research Phase, Reference Work and the Final Product as a 3D model.

Designing something from scratch is a difficult process, though. A big chunk of time is spent gathering real-life references and inspiration from similar projects. All this time could be saved by using Generative AI to design less complex spaceships and props, but that would remove a big part of the creative practice that is crucial for developing authentic designs and making more artistically vibrant comics.

Also, the Universe of ELZA is planned as a decade-long project, and many of its designs are destined to become iconic. That is why it is important to maintain human authorship behind them.

Exceptions – Use of Generative AI in the Design Process

The concept process for Elza's Rescue Suit: from an AI-generated dummy, to hand-drawn variations, to an AI-upscaled final result.

AI can still prove useful in the conceptualization process, though, but only as a time-saving tool. Above is an example of the ideation behind the Imperial Standard Rescue Suit. The idea was always that Elza would wear an orange overall with straps, referencing the costumes of Soviet cosmonauts and pilots.

A plain, generic orange overall was generated by AI as the base for this design (left part of the image). Drawing something so simple by hand would unnecessarily consume time and effort that could be spent on more creative tasks. To turn this plain outfit into a unique design, a few variations were hand-drawn over it (middle part of the image).

Each of these variants was tested for compatibility with an AI-Upscaler. Some produced underwhelming results, as the AI Model did not stylize the two asymmetrical options well. The concept that worked best with the AI-Upscaler was chosen for the comic (right part of the image).

This is an example of a design process in which AI automates a non-creative part (drawing a plain orange overall) but does not affect the final result or restrict the human author's creativity in any way. (The only exception is that some designs were technically incompatible with the AI-Upscaler, but that is a production-related restriction.)

Writing

Writing can be both the hardest and the easiest part of the creative process. But regardless of the purpose behind a written piece, be it simple flavor text, an outline, a script or a multi-page article, Generative AI is excluded from all writing by ENTIA Comics. The reason is simple: AI is so overused for copywriting and brainstorming that everything published online is beginning to look the same. And because humans love novelty, “real” language becomes much more attractive to the reader's eye than any “perfect” AI-powered result.

Also, the Universe of ELZA is not a sales/marketing startup but an entertainment project. Its stories are meant to awaken the reader's curiosity and fill them with awe. It's doubtful that anyone is really curious to read something written by a soulless machine, or would enjoy a generic story that leads nowhere (novelty-driven research cases aside, of course).

Generative AI is of little use for worldbuilding and storytelling either, as it is mostly good at providing ready-made solutions that usually represent the most mediocre of all possible outcomes. Nor is it good for conducting research, unless it can cite every source it used to gather information.

That is why stories and articles from the Universe of ELZA are written in the most traditional way possible. Each is the result of multiple drafts and prolonged research, and is usually based on personal experiences and on inspiration from films, books, news and scientific articles gathered from credible sources.

The only times AI is involved in writing are grammar checks with Google's tools (Google Docs autocorrection) and searches for authentic sources and articles to read (Google Gemini with Google Search).

3D Modeling and Rendering

All unique items in the Universe of ELZA, like weapons, props, interiors, armor and spaceships, are handcrafted 3D models. The only exceptions to this rule are some architecture (buildings, cityscapes) and landscapes. These two are expected to become completely handcrafted in the future as well.

The reason for such a hands-on approach (pun intended) is simple. Just as the writing and worldbuilding are vessels for the ENTIA soul that fills this project, the design language and environmental storytelling serve the important function of making the Universe of ELZA stand out among similar projects.

Each 3D model by ENTIA Comics is made from scratch in Blender 3D. These models are the result of a thorough design process and are meant to amplify the comics' narrative by giving the Universe unique and memorable designs. This process takes time, though, as high-fidelity 3D modeling is quite time-consuming. Around 50-70% of the production time for each comic is expected to go into pre-production, including the design process and work on the library of 3D assets.

Only when all handcrafted parts are fully modeled and the story is written in its final draft can the actual production of the comic begin, and only then does Generative AI enter the creative process.

On the Actual Use of Generative AI by ENTIA Comics

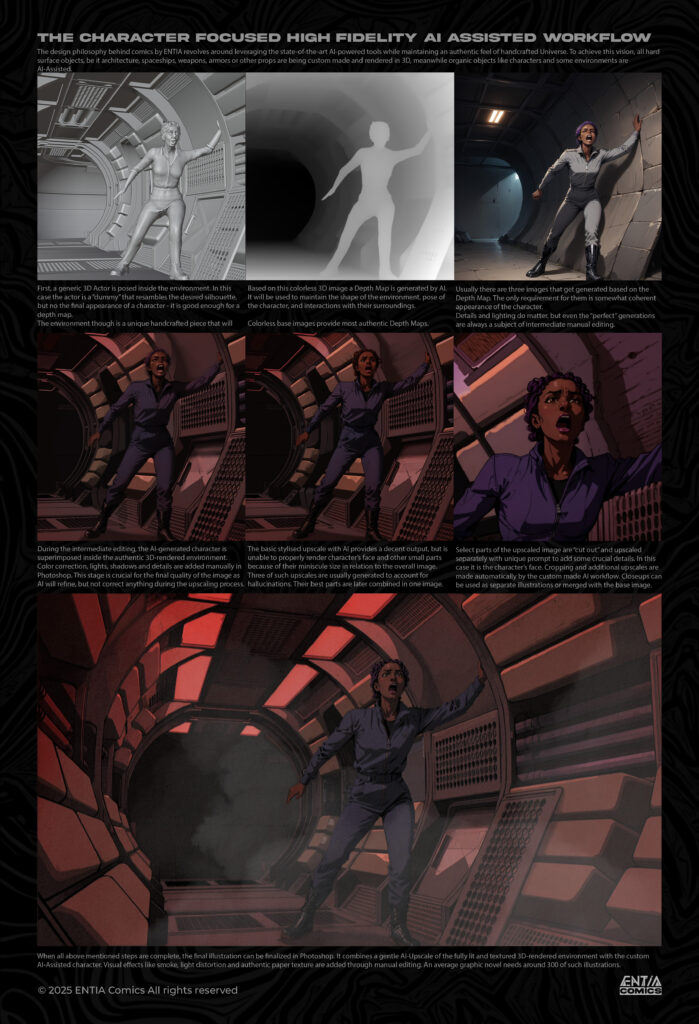

As of 2025, the workflow behind ENTIA Comics illustrations has been rebuilt from the ground up. Every image is now pre-visualized in a 3D environment with manually posed 3D actors.

For more abstract environments, like this forest, simpler 3D environments are used. They are left untextured, as their sole purpose is to provide a depth map that serves as the base for an AI-generated surrogate image.

Merging AI-Assisted Characters with the 3D Environment

In total, two types of 3D environments are used for the comic: Abstract and Specific.

- Images made in Abstract environments, like the forest in the image above, do not require a high level of background consistency and can therefore be fully AI-generated from an untextured 3D render. This saves time that would otherwise be spent on lighting and material creation. Even if the AI-generated results have inconsistent colors and lighting, everything can easily be adjusted in Photoshop before the final upscale.

- Specific environments, like the interior in the image below, require a much higher level of background consistency. As in a movie, if elements of a fixed background wander from left to right or disappear between illustrations, it can break the reader's immersion. Therefore, a high-fidelity 3D model of the specific environment is used for the background. Still, 3D renders sometimes come with unwanted artifacts and roughness. These can be smoothed out with a gentle AI-upscale that revamps materials while preserving the overall image. The final illustration is usually a mix of 3D-rendered and AI-upscaled parts merged in Photoshop.

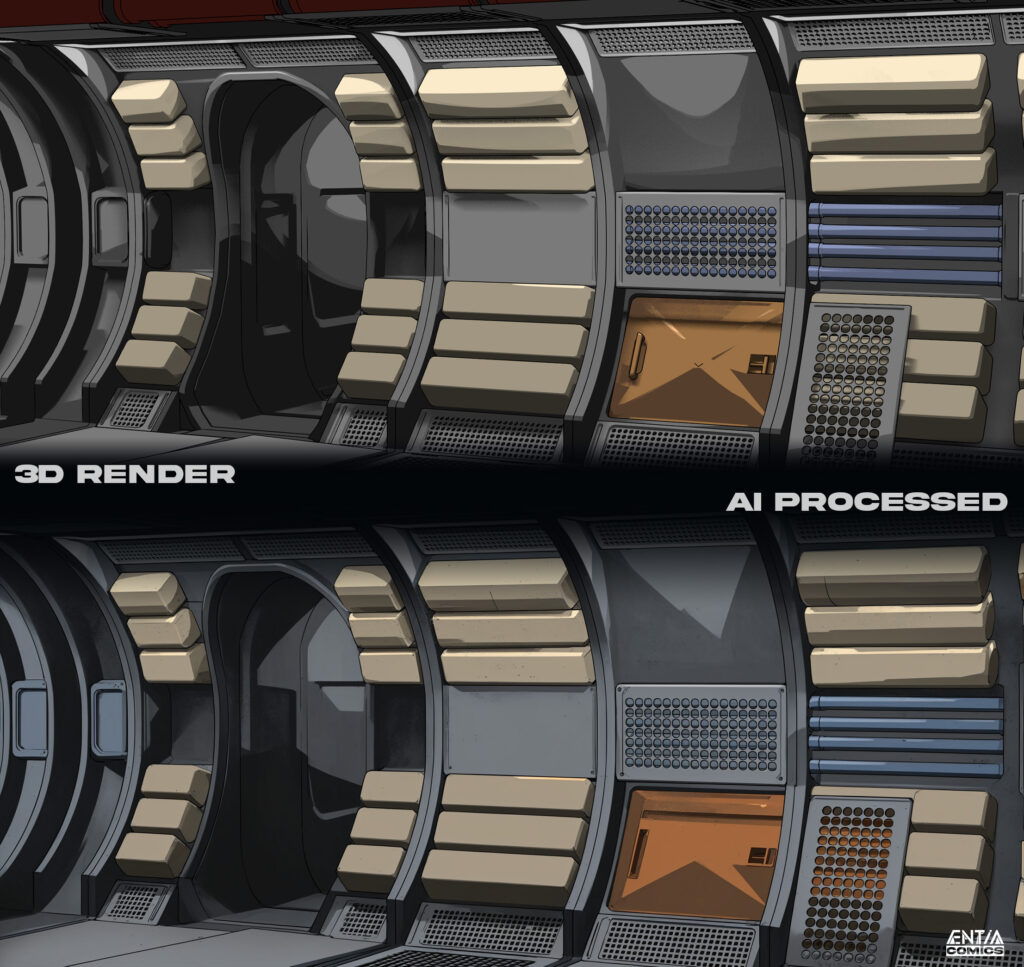

This spaceship corridor is a good example of a very Specific environment. It could be used on the pages of the comic as-is, in its pure 3D-rendered form.

But it benefits greatly from a gentle AI-upscale with the same style-trained model that renders the comic's characters. This way, characters and environments remain stylistically congruent despite the different techniques behind them.

Characters in this workflow remain AI-assisted because Generative AI can be really good at rendering humans and basic clothing. However, important details like the colors of skin, hair and clothes, as well as lighting, are drawn by hand over the AI-generated surrogate. This is crucial for keeping the character consistent with the environment, and it adds an extra human touch to the illustration. The full process of combining the AI-assisted character with the handcrafted 3D environment is presented in the image below.

Illustrations are made by superimposing AI-generated character(s) over the handcrafted, 3D-rendered environment. After an intermediate visual edit, the collage is processed with the stylistically congruent AI model. The composition of the final illustration is finished in Photoshop and includes pre-made 2D elements like props, light, fire, explosions and smoke, which are carefully layered over and blended with the upscaled image.

Character Work

While characters, as mentioned above, are AI-assisted, there is little to no room for random details in their appearance.

This high standard of consistency and quality can be achieved in several ways:

- Manual editing: in the legacy comics, characters had relatively simple appearances, because most customization was done by drawing over the AI-generated images by hand. This method was very time-consuming and not viable for more complex outfits. Now that each character's appearance has become an increasingly handcrafted piece, a more sophisticated approach is needed.

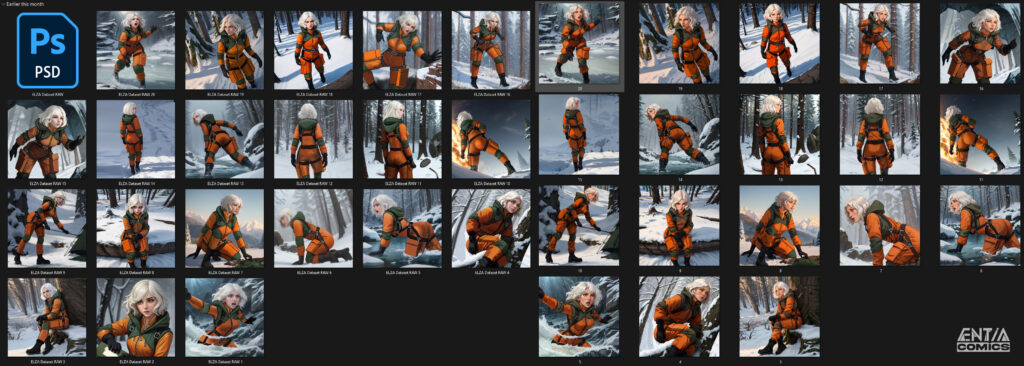

- Creating a LoRA: this means training a small add-on to the base AI Model that learns to depict a specific character or concept. While a LoRA is not a silver bullet that removes all need for manual editing, it provides just enough general detail for AI-generated characters to avoid most AI-related randomness, which minimizes manual editing before the final AI-upscale.

An example of the image dataset featuring Elza in her emergency suit. Manually redrawn images are on the left; the AI-upscaled illustrations that were used to train the LoRA are on the right.

There is a catch, though: training a character LoRA requires around 20 finished, high-resolution illustrations of the character in question. And just as in the good old times, these still require extensive manual work to produce. Fortunately, since a graphic novel needs hundreds of individual illustrations, having to draw only twenty of them is still an enormous time saver (image above).

With this method, it becomes possible to consistently generate characters in deliberately designed, complex outfits that need very little manual editing before the final AI-upscale.

The Question of Value – AI vs. Human

Just as the perceived value of a manuscript rests on the quality of its writing, an illustration is valued for the craftsmanship behind its creation. But the creator's skill is not the only factor that determines the value of their work; there is also the matter of the idea!

Plenty of mainstream productions have proven that no matter how much money or how many man-hours of the world's best craftspeople are spent on a film, series or comic, if it has no strong thematic content and valuable ideas behind it, it is doomed to be consumed once and then forgotten by audiences.

History has also shown that a unique, well-developed idea can turn a newcomer's debut novel into a bestseller, or a film or series of mediocre visual quality into a cult classic! The same can be said about comics, or sequential art, which combines the communicative qualities of both visual art and literature.

But what happens to perceived value when Generative AI gets involved? The answer is pretty simple: AI replaces part of the human labor in the creative process, and thereby affects only that aspect of the product's value. A completely AI-generated product may therefore be recognized as aesthetically pleasing, yet perceived as devoid of real value, because it has neither human craftsmanship nor valuable ideas behind its creation.

This trend can be clearly seen in games with procedurally generated locations like Starfield: players value exploration there much less than in handcrafted experiences like Skyrim or Fallout 4. This holds even though the latter was procedurally generated at its base and then built upon manually by the game developers until it reached its final shape.

This means that if AI is involved in creating a piece of art, the only way to preserve its perceived value is to restrict AI use to a clearly defined, narrow part of the final product. A product in which both the human creator and the machine are involved can be classified as AI-assisted, because in this case Generative AI becomes a mere tool or executor, while the human retains the role of author.

In comics, such an AI-assisted approach may take the form of an artist who draws illustrations by hand and asks AI to supply the story with witty dialogue, of a writer who illustrates their stories with AI-generated imagery, or of anything in between. The general rule seems to be that the more human craftsmanship goes into a product, the higher its perceived value.

Because of this value-focused approach, the Universe of ELZA features an AI-free, handcrafted narrative together with AI-assisted illustrations. This means that every article and every line of dialogue in the final product is written by a human. Likewise, all character, item and location designs are deliberately created by a human, even though some may be based on AI-generated imagery.

Where images are fully AI-generated, they tend to bear little significance for the final artistic output, be it a background image of a generic snowy forest or a close-up variation of a character's facial expression. Even these images, though, are carefully prompted to represent very specific ideas of the human author, and are then heavily altered by hand before the reader sees them. As a result, the final illustrations of the comic contain few to no random elements that the human author did not originally intend.

A thorough disclosure of all the creative processes and techniques used by ENTIA Comics can be read below.

Conclusion

Generative AI is a powerful tool that can rival human craft, but its output has little to no perceived value if no human labor is added to it. This human-centric approach gives both the comics and the articles of the Universe of ELZA an authentic sincerity that no machine can achieve. That is why, despite the prominent use of AI tools, ENTIA Comics' creations maintain high perceived value.

It is important to note, though, that while most actors aim to optimize away human labor by replacing it with AI-powered tools, ENTIA Comics has a completely different vision. For now, the use of Generative AI is vital to making the Universe of ELZA possible, as it is created by a single human with limited artistic skills. As more demanding stories are written and more illustrations are assembled, those skills develop along with the project. This means that as the Universe of ELZA grows, it will feature more of the human touch and less AI involvement in its creation. This is the vision. This is the promise.